About me

★ I am currently looking for academic and industry positions. Feel free to reach out if you are interested in chatting or collaborating. I am always open to new ideas and opportunities.

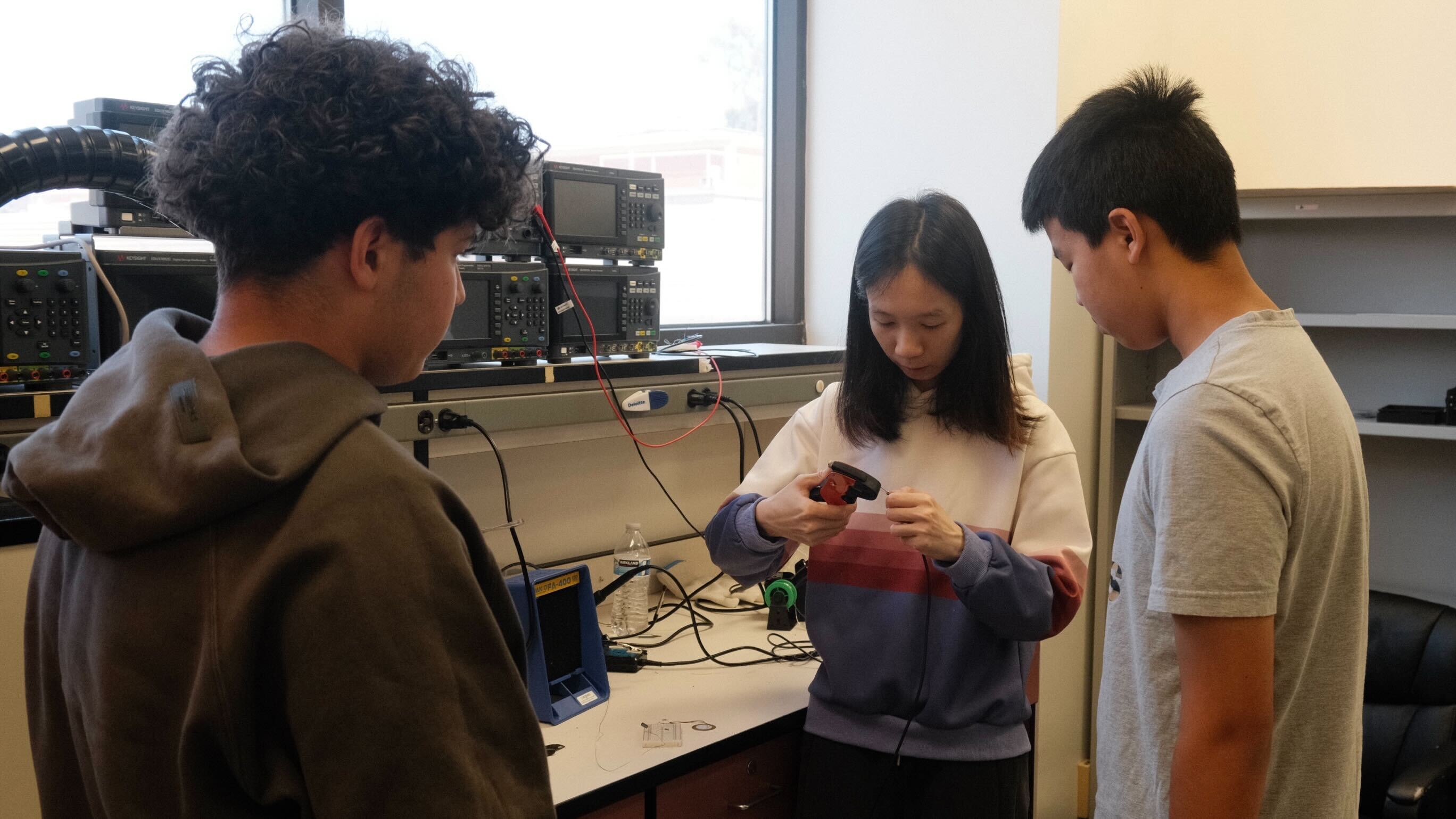

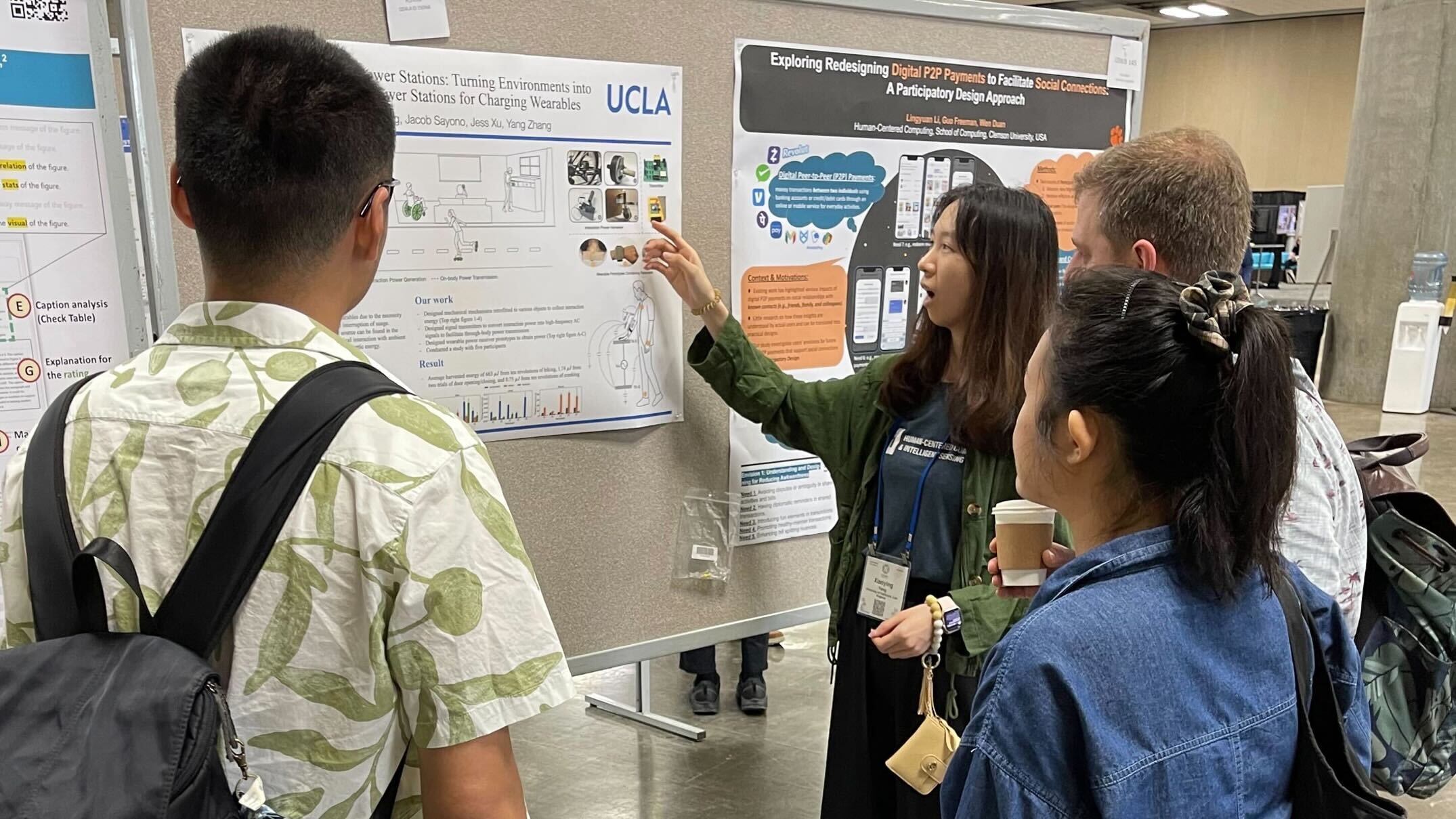

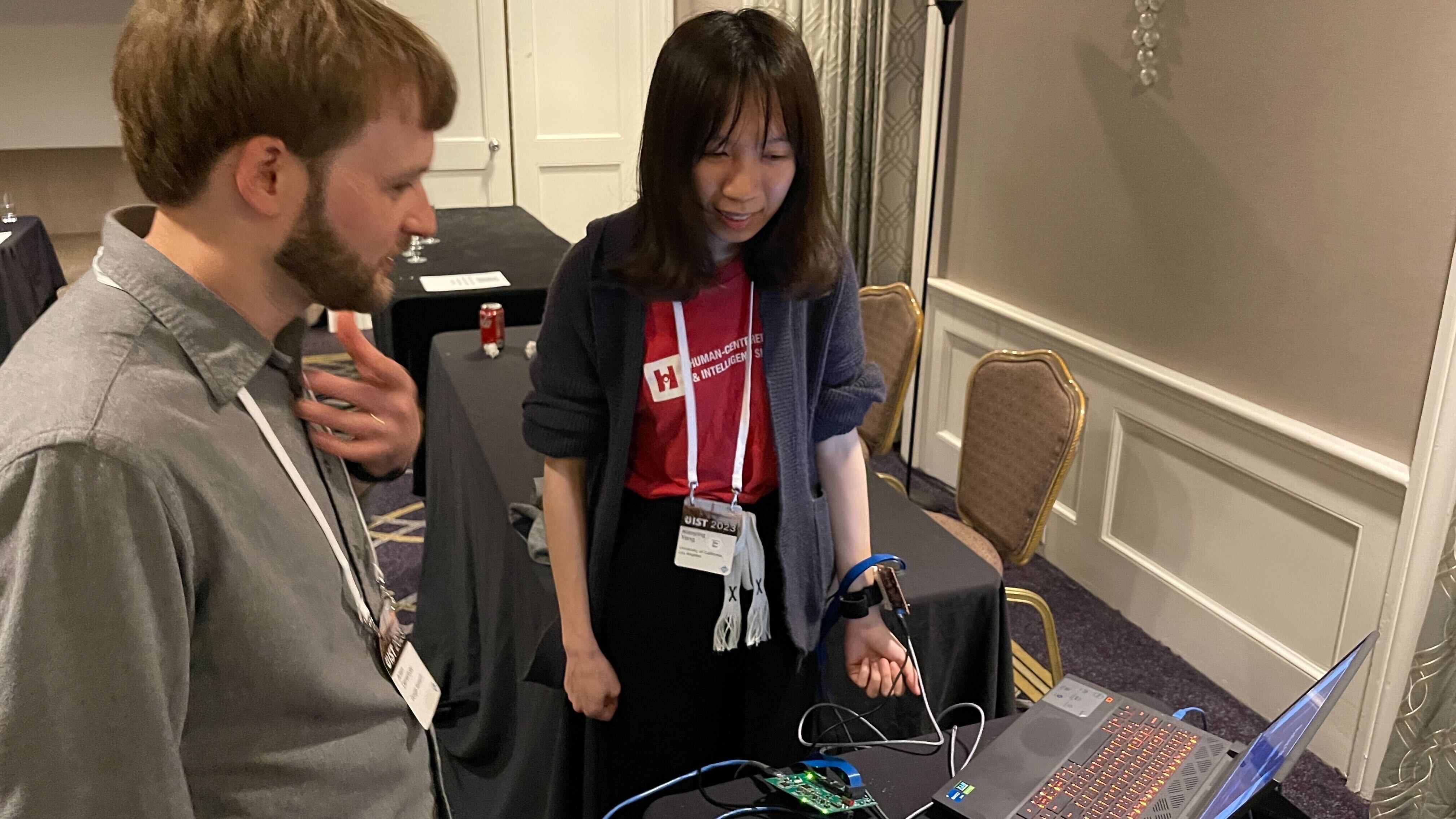

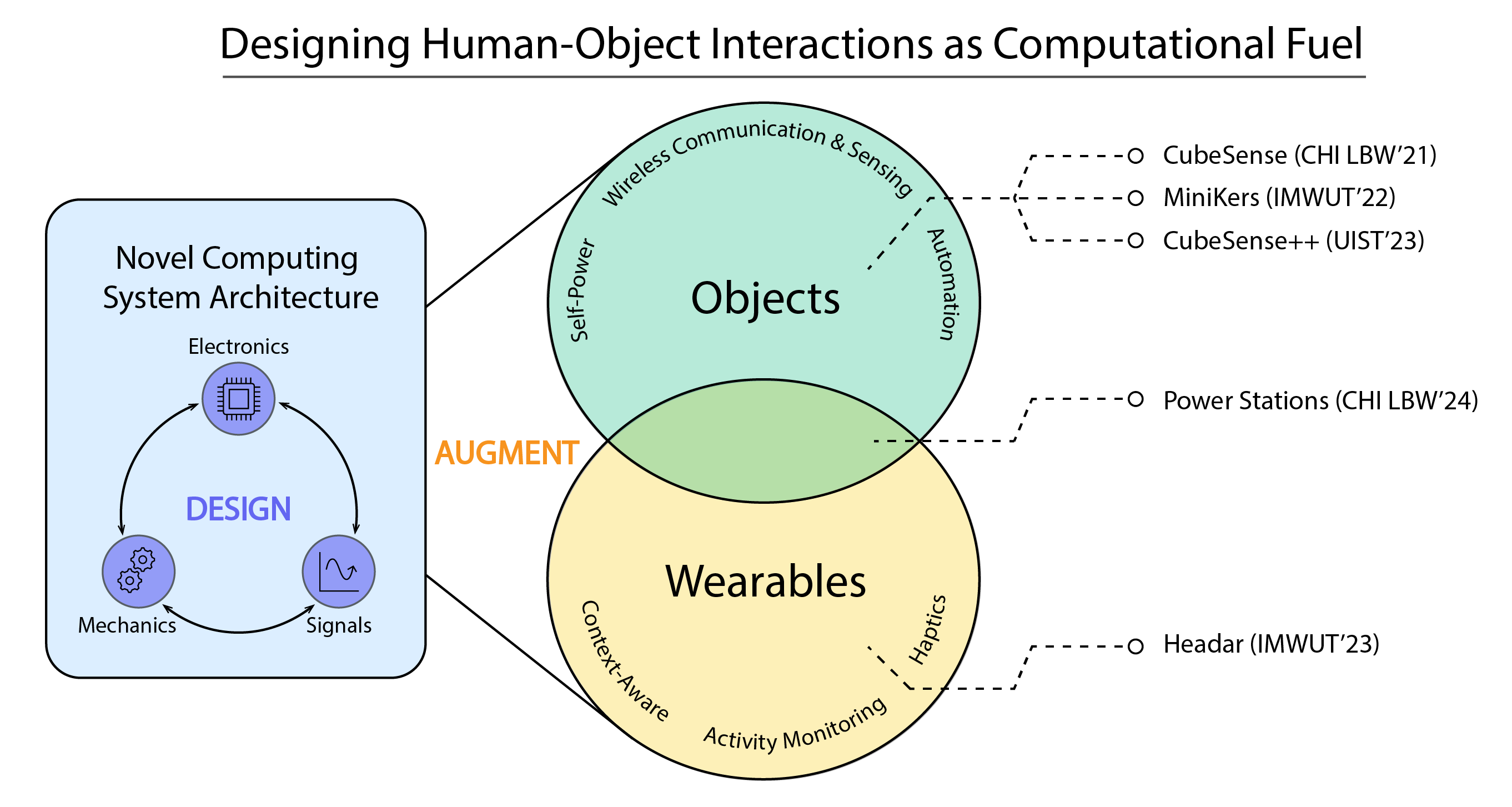

I am a PhD candidate at the Human-Centered Computing & Intelligent Sensing Lab (HiLab) in the Department of Electrical and Computer Engineering (ECE), University of California, Los Angeles (UCLA), advised by Professor Yang Zhang. My research is motivated by the vision of ubiquitous computing, in which technology blends seamlessly into everyday interactions. My background is in Human-Computer Interaction (HCI), and my dissertation focuses on how the energy and information that flow during physical human-object interactions can be used to enhance the context-awareness, connectivity, and ubiquity of embedded computing systems. I take a system-level research approach, designing hardware, sensing signals, and algorithms together to make physical systems low-cost, energy-efficient, intelligent, and easy to use.

I love imagining future technology, and I am passionate about joining the researchers and engineers who are bringing J.A.R.V.I.S. (Just A Rather Very Intelligent System) closer to reality. My PhD training in HCI lets me go beyond daydreaming and turn ideas into tangible, functional prototypes by blending creativity, research, and engineering. My work often integrates computer vision, machine learning, electronics, and hardware design. I envision software intelligence and physical devices being developed jointly, so that they genuinely support everyday tasks and enrich real-life experiences through enjoyable and meaningful interactions.

Outreach